Robust robotics evaluation requires simulations running at scale. NVIDIA's Isaac Sim now integrates with ReSim's evaluation platform to automate and scale testing. This collaboration gives robotics developers fast, economical insight into their progress.

NVIDIA Isaac Sim empowers robotics developers with advanced, scalable simulation for accelerating AI-powered robotics. Built on NVIDIA Omniverse, Isaac Sim delivers highly realistic, physically accurate environments for developing, testing, and validating robots virtually before deployment. But simulation availability is only as useful as the insights engineers are able to glean from sim testing.

ReSim orchestrates testing at scale with compute costs and efficiency as our north star. ReSim then automatically outputs metrics with every single test, giving roboticists at-a-glance access to the data that matters most to inform their testing and development. ReSim closes the loop by enabling quick comparisons between tests and by orchestrating parameter sweeps to dive deep into critical variables.

Scale Isaac Sim testing using ReSim

In scaling simulation workloads for autonomous systems, we’ve learned a few practical lessons about running complex simulations reliably and efficiently in the cloud. Whether it’s node reuse or container image mirroring, there are plenty of opportunities to save time and cost, and we’ve built ReSim to make those practices accessible out of the box.

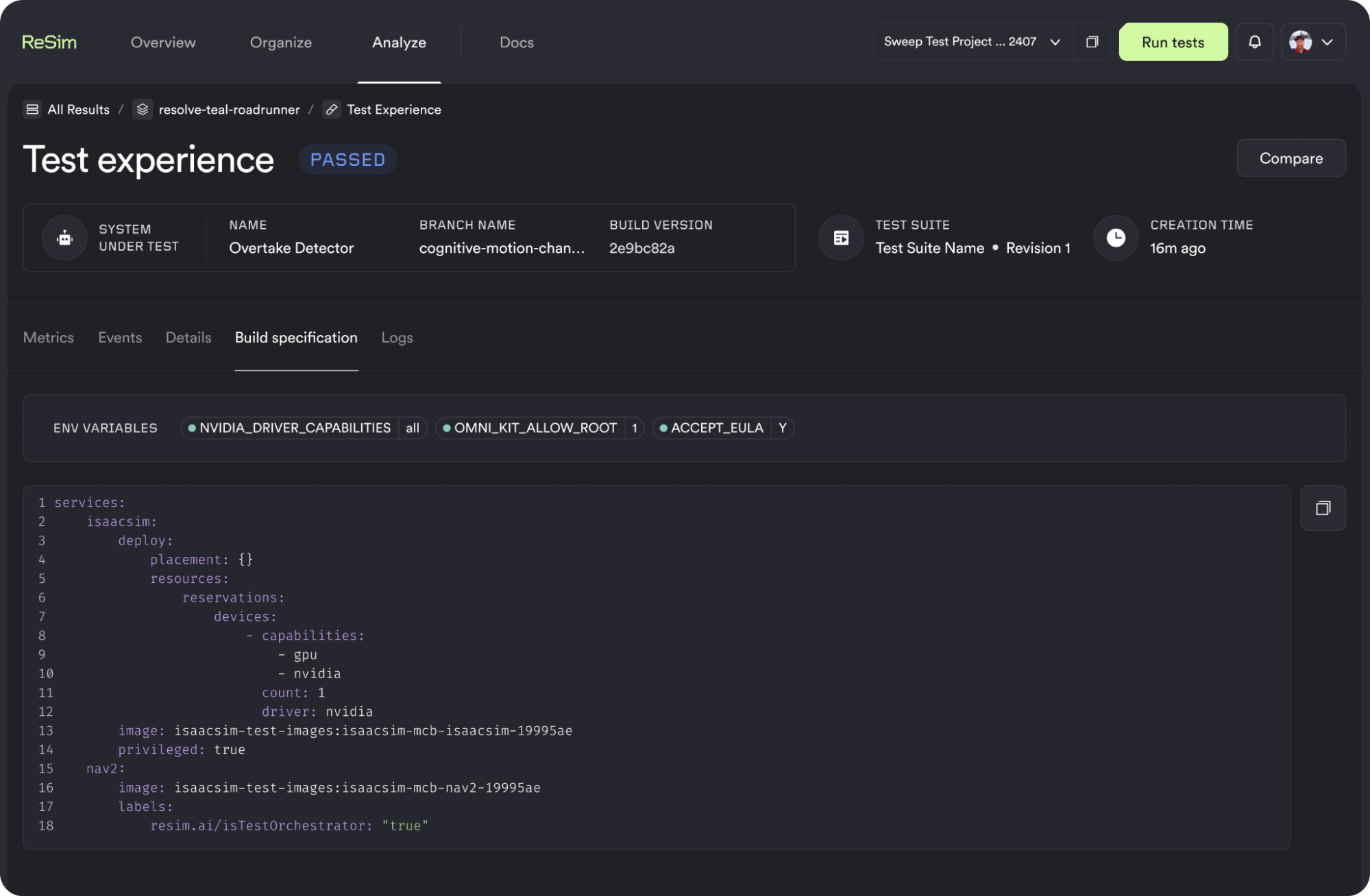

Most real-world systems aren’t confined to a single container. Coordinating multiple nodes for swarm robotics, supporting cloud services, or numerous compute nodes can get complicated, especially if you’re relying on tools like Docker-in-Docker. ReSim supports multi-container builds natively, making it easier to model realistic deployments.

If you’re using Isaac Sim, getting started is straightforward: use the standard container image and add it to your service configuration. This avoids the overhead of rebuilding monolithic containers just to integrate with the rest of your stack. Small tip: pre-caching shaders inside your image can shave up to five minutes off test startup!

Managing large asset libraries and scene files in simulation often leads to redundant transfers and long startup times, especially when working in the cloud. ReSim supports experience caching out of the box: when you include your USDs and asset sets as part of the experience input, they’re stored and reused across runs. This helps reduce cloud transfer costs and avoids the repeated wait time of fetching assets for every test.

At-a-glance insights with every test ReSim runs

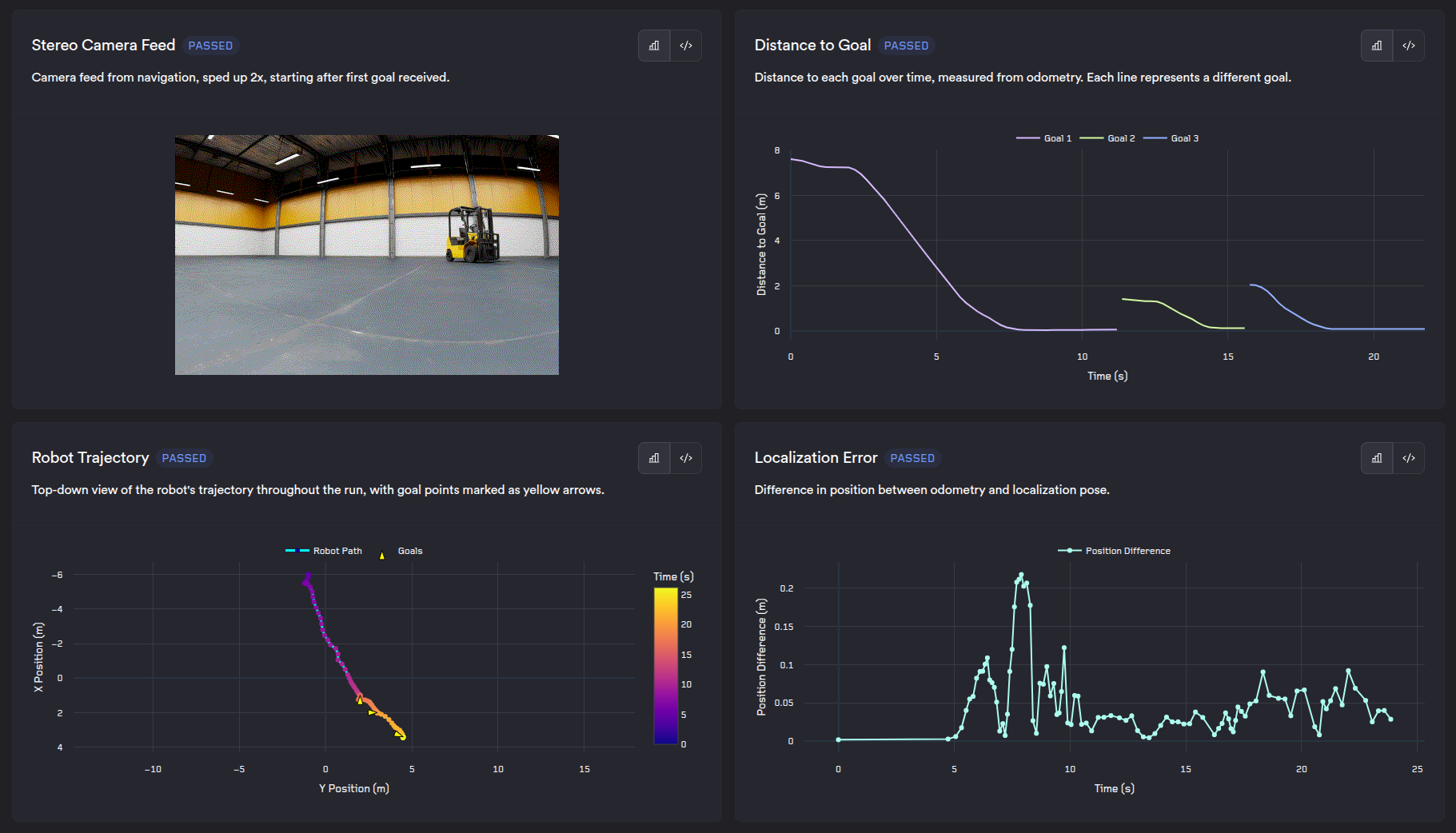

Simulation is a valuable way to evaluate system behavior without relying on physical hardware, but pass/fail results only tell part of the story. ReSim makes it easier to go deeper by surfacing metrics and visualizations that help explain why a test failed, not just that it did. This kind of insight can be especially useful for debugging regressions, spotting performance issues, or validating system changes. By integrating these analytics into the test workflow, teams can iterate faster and rely more fully on simulation-driven development.

The ReSim interface makes it easy to review test results. Each test run includes relevant metrics in a single view, so developers can quickly spot issues without bouncing between tools and dashboards.

In the example above, ReSim’s implementation of Isaac Sim’s ROS2 Navigation demo, we extracted metrics like the robot’s distance to its goals, a top-down trajectory view, and the localization error relative to ground truth. Paired with the video playback of the robot, this helped explain a behavioral issue: the robot was stalling near its goals because it struggled to align with the target pose.
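As a rough illustration of how metrics like these can be derived from logged poses, here is a minimal Python sketch that computes distance to goal and localization error for a short 2D trajectory. The function names and sample data are hypothetical, chosen only for this example; they are not part of the ReSim or Isaac Sim APIs.

```python
import math


def distance_to_goal(position, goal):
    """Euclidean distance between a 2D position and a 2D goal."""
    return math.hypot(goal[0] - position[0], goal[1] - position[1])


def localization_errors(estimated, ground_truth):
    """Per-sample Euclidean error between estimated and ground-truth 2D positions."""
    if len(estimated) != len(ground_truth):
        raise ValueError("trajectories must have the same number of samples")
    return [
        math.hypot(e[0] - g[0], e[1] - g[1])
        for e, g in zip(estimated, ground_truth)
    ]


# Hypothetical logged data: ground-truth poses from the simulator and
# the robot's own localization estimates at the same timestamps.
ground_truth = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
estimated = [(0.0, 0.0), (1.0, 0.1), (2.0, 0.3)]
goal = (2.0, 0.0)

distances = [distance_to_goal(p, goal) for p in ground_truth]
errors = localization_errors(estimated, ground_truth)
max_error = max(errors)
```

Plotting `distances` and `errors` over time next to the video playback is one way to see whether a stall near the goal lines up with growing localization error or with a pose-alignment problem.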

Sometimes, you won’t have extracted the exact metrics your team needs to understand a failure. When you need deeper visibility, ReSim supports direct integration with tools like Foxglove, allowing you to stream results from your primary site for interactive inspection. If you’re using Rerun, you can open .rrd files directly in the viewer without extra steps. For other visualization or log analysis tools, ReSim’s outputs are accessible from S3, making it straightforward to plug into your existing workflows.

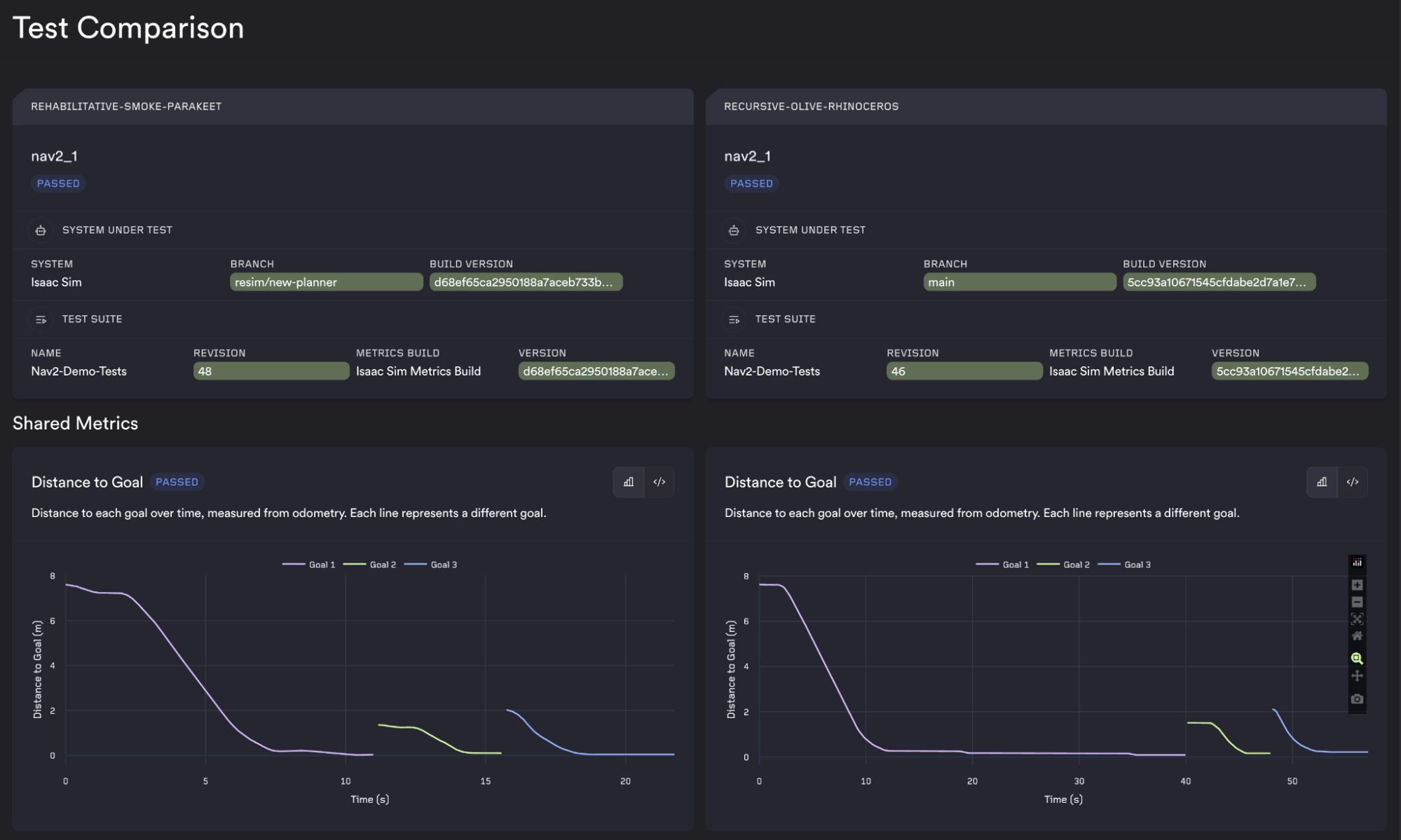

Easy exploration and iteration of test results lead to faster development

ReSim is designed to support fast, repeatable testing workflows, whether you're debugging a single issue or validating performance across a range of configurations. Using our GitHub Action, teams can integrate ReSim directly into their CI pipelines to automatically build and test on every pull request, schedule nightly regression tests, or kick off exploratory runs as needed.

When tuning or validating behavior, ReSim makes it easy to run parameter sweeps. You can define input matrices that vary, for example, the goal checker tolerances, controller limits, or localization parameters. ReSim will execute each configuration in parallel, logging results, metrics, and visualizations for side-by-side comparison. This helps surface sensitivities and edge cases early in the development cycle.
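To make the idea of an input matrix concrete, the sketch below expands a small matrix into one configuration per combination using the Python standard library. The parameter names and values are assumptions picked for illustration, and this is not ReSim's sweep format; it only shows how quickly the number of runs grows as dimensions are added.

```python
from itertools import product


def expand_sweep(matrix):
    """Expand {name: [values]} into one config dict per combination."""
    names = list(matrix)
    value_lists = [matrix[name] for name in names]
    return [dict(zip(names, combo)) for combo in product(*value_lists)]


# Hypothetical sweep over goal checker tolerances and a controller limit.
sweep = {
    "goal_xy_tolerance": [0.1, 0.25],
    "goal_yaw_tolerance": [0.1, 0.25, 0.5],
    "max_linear_velocity": [0.5, 1.0],
}

configs = expand_sweep(sweep)
print(f"{len(configs)} configurations")
for config in configs[:3]:
    print(config)
```

Here two, three, and two values yield 2 × 3 × 2 = 12 configurations, each of which would correspond to one independent test run in a sweep.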

Beyond ReSim’s core platform, our Professional Services team brings experience working with autonomy and robotics teams across the industry. We can help customize test environments, integrate domain-specific tools, or scale up your simulation infrastructure. Please reach out using our “Contact Us” form above if you’d like to learn more.

Acknowledgements

Many thanks to the NVIDIA Isaac team, in particular Austin Klein, Vikash Kumar, and Spencer Huang, for supporting this integration and pairing with us to make batch running in ReSim as efficient as possible!